A bunch of people were talking about this Nature Jobs article on the GRE this morning while I was proctoring the final for my intro E&M class, which provided a nice distraction. I posted a bunch of comments about it to Twitter, but as that’s awfully ephemeral, I figured I might as well collect them here. Which, purely coincidentally, also provides a nice way to put off grading this big stack of exam papers…

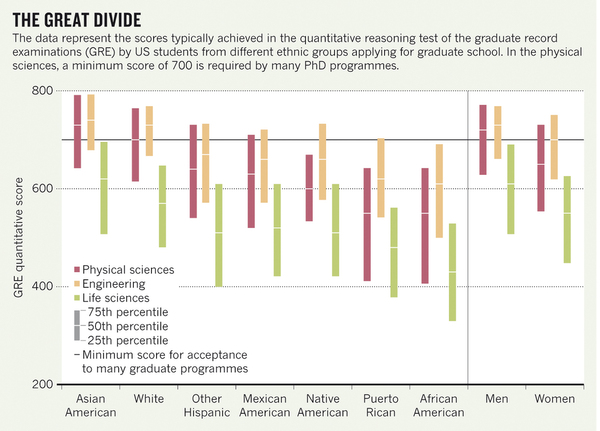

Anyway, the thrust of the article is that the GRE is a bad thing to be using as an admissions criterion for graduate school in science and engineering, because it has large disparities in scores for different demographic groups (the key graph is reproduced above). The authors push for a more holistic sort of system including interviews rather than an arbitrary-ish numerical cut-off on GRE scores, which, purely coincidentally, is what they do at a program they help run that has a good track record.

Some miscellaneous thoughts on this:

— It should be noted up front that this is very much an op-ed, not a research article in the general Nature mode. There are no references cited, and only occasional mentions of quantitative research. This is kind of disappointing, because I’m a great big nerd and would like to see more data.

— I am shocked– SHOCKED!– to hear that the GRE isn’t a great predictor of career success, which they attribute to William Sedlacek, probably alluding to research in this paper (PDF) among other things.

— The bulk of the piece is devoted to talking about race and gender gaps in GRE scores, focusing on the math section of the test. They repeatedly mention the use of a cut-off around 700 on the math section, and point out that this is a problem given that very few women and underrepresented minorities score this high.

This seems like an especially dubious technique, given that math scores would be only a small part of what you would like to measure for graduate admissions. And while I’ve heard rumors of GRE cut-offs in graduate admissions, I’ve always heard them discussed in the context of the subject tests more than the general exams. They don’t have data on those, though, probably because the statistics would be much worse due to the smaller number of test-takers.

(It should also be noted that this score cut-off is another unsourced number, and honestly, I’d be a little surprised if it could be definitively sourced, because that seems like lawsuit fodder to me. I doubt you’ll find any graduate school anywhere that will admit to using a hard cut-off for GRE scores– which doesn’t mean that it isn’t used in a more informal way, of course, but I’d be very surprised if anybody copped to using a specific number.)

— When I initially looked at the graph above, the thing that struck me was not just the big gaps between groups, but the fact that those gaps seemed to be smaller in physical sciences than in life sciences. That seemed surprising, as the life sciences are often held up as doing much better than physical sciences in terms of gender equity in particular.

On closer examination, the difference isn’t as big as my first look seemed to suggest– pixel-counting in GIMP puts the largest racial gap at 190-ish score points for life science and 180-ish for physical science, and the gender gap at 60 and 70, respectively. Not a significant difference.
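For anyone who wants to repeat the pixel-counting, it's just a linear calibration. Read two labelled ticks on the score axis, work out score points per pixel, then convert bar positions to scores. Here's a minimal Python sketch. It assumes the axis is linear, the helper names are my own, and the pixel rows are hypothetical, not measurements of the figure above.

```python
def make_pixel_to_score(px_a, score_a, px_b, score_b):
    """Return a function mapping a vertical pixel row to a score,
    using two labelled axis ticks as reference points (linear axis assumed)."""
    scale = (score_b - score_a) / (px_b - px_a)  # score points per pixel

    def to_score(px):
        return score_a + (px - px_a) * scale

    return to_score


def gap_in_points(to_score, px_top, px_bottom):
    """Score difference between two bar tops, given their pixel rows."""
    return abs(to_score(px_top) - to_score(px_bottom))


# Hypothetical tick positions: the 400 tick at row 300, the 800 tick at row 100
# (image rows count downward, so the scale comes out negative)
to_score = make_pixel_to_score(300, 400, 100, 800)

# Hypothetical bar tops for two groups at rows 120 and 215
print(gap_in_points(to_score, 120, 215))
```

The precision is limited by how well you can click on the top of a bar, which is why "190-ish" is about as far as I'd trust it.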

— I will note, however, that biologists suck at math– average math GRE scores for life sciences are something like 110 points lower than those for physical sciences, comparable to the racial and gender gaps that they find. Which might in some sense mean that the gaps are worse (as a fraction of the overall score) in life sciences. But then, I’m not sure that’s the right thing to look at.
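The "as a fraction of the overall score" comparison is simple arithmetic. Divide each gap by the field's average. In the sketch below, the gaps are the pixel-counted ones from above and the 110-point offset is from the text. The physical-sciences average of 700 is a hypothetical placeholder, not a number from the article.

```python
# Gaps in score points, pixel-counted from the figure (see above)
gaps = {
    "physical": {"racial": 180, "gender": 70},
    "life": {"racial": 190, "gender": 60},
}

PHYSICAL_MEAN = 700  # hypothetical average math score, physical sciences
OFFSET = 110         # life sciences roughly 110 points lower, per the text
means = {"physical": PHYSICAL_MEAN, "life": PHYSICAL_MEAN - OFFSET}

# Each gap expressed as a fraction of that field's average score
relative = {
    field: {kind: gap / means[field] for kind, gap in kinds.items()}
    for field, kinds in gaps.items()
}

for field, kinds in relative.items():
    print(field, {kind: round(value, 3) for kind, value in kinds.items()})
```

Dividing by the full score also ignores the 200-point floor of the old 200-800 scale. Measuring from the floor would change the ratios, which is one reason I'm not sure this is the right thing to look at.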

— When talking about the use of GRE scores as a cut-off, they write:

This problem is rampant. If the correlation between GRE scores and gender and ethnicity is not accounted for, imposing such cut-offs adversely affects women and minority applicants. For example, in the physical sciences, only 26% of women, compared with 73% of men, score above 700 on the GRE Quantitative measure. For minorities, this falls to 5.2%, compared with 82% for white and Asian people.

The misuse of GRE scores to select applicants may be a strong driver of the continuing under-representation of women and minorities in graduate school. Indeed, women earn barely 20% of US physical-sciences PhDs, and under-represented minorities — who account for 33% of the US university-age population — earn just 6%. These percentages are striking in their similarity to the percentage of students who score above 700 on the GRE quantitative measure.

That last sentence reads as slightly snotty to me, in a “I’m going to insinuate a causal relationship that I can’t back up” kind of way. So I will point out that the percentage of women earning Ph.D.’s in physics is also strikingly similar– according to statistics from the American Institute of Physics– to the percentage of women earning undergraduate degrees in physics (both around 20% in the most recent reports), and for that matter to the percentage of women hired into faculty positions in physics (around 25%). Just, you know, as long as we’re pointing out numerical similarities.
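When you compare these percentages, it helps to keep two numbers separate. The 26% in the quoted passage is the fraction of women who score above 700. It is not the fraction of above-700 scorers who are women, and the second number is what would shape a pool filtered by a cut-off. Converting one to the other requires the share of test-takers who are women. The article doesn't give that, so the 30% below is purely a hypothetical placeholder, and the helper name is mine.

```python
def share_of_passers(group_share, pass_rate_group, pass_rate_other):
    """Fraction of above-cut-off scorers who belong to the group (Bayes' rule)."""
    passed_group = group_share * pass_rate_group
    passed_other = (1 - group_share) * pass_rate_other
    return passed_group / (passed_group + passed_other)


# Fractions scoring above 700 in the physical sciences, from the quoted passage
WOMEN_PASS, MEN_PASS = 0.26, 0.73

# Hypothetical: women as 30% of physical-sciences test-takers
print(round(share_of_passers(0.30, WOMEN_PASS, MEN_PASS), 3))
```

Change the hypothetical share and the result moves a lot. That is part of why lining it up against PhD percentages tells you less than it seems to.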

— I would be all in favor of de-emphasizing the GRE in favor of other things, not least because our students tend not to score all that highly on the Physics subject test. (And, for that matter, I didn’t score all that highly on it, back in the day– I was in the 50th percentile, far and away the lowest percentile score I ever had on a standardized test.) Their call for considering a wider range of factors is something eminently sensible, and I would be all for it.

The trouble with this is buried in a passing remark in talking about the score disparities:

These correlations and their magnitude are not well known to graduate-admissions committees, which have a changing rota of faculty members.

Unlike undergraduate admissions, which is generally handled by full-time staffs of people who do nothing but make admissions decisions, graduate admissions decisions are more likely to be made by faculty who are stuck reading applications as part of their departmental service. And I think that, more than “a deep-seated and unfounded belief that these test scores are good measures of ability” (quoting from the penultimate paragraph), explains the use of the GRE. To whatever extent GRE scores get used as a threshold for admissions, it’s not because people believe they’re a good indicator of anything, but because it’s quick and easy, a simple numerical way to winnow down the applicant pool and let the faculty get back to doing the things they regard as more important.

(To be fair, my knowledge of the details of the graduate admissions process is very limited, as we don’t have a grad program. I’m going off conversations I’ve had with people at larger schools, which may be skewed by people who are blowing off steam by kvetching at conferences.)

Ultimately, I think that’s going to be the sticking point when it comes to implementing alternative measures. A more holistic approach would take more time and effort, and that’s not going to be popular with a wide range of faculty. You can probably implement that sort of thing in departments where enough people buy in to the idea, but that doesn’t tend to be either scalable or sustainable.

The other approach is to emulate undergrad admissions, and have full-time dedicated staffs for this kind of thing, shifting away from the “changing rota of faculty” to people whose job depends on doing admissions properly. But that requires a significant commitment of resources to a non-research activity, which again is kind of a hard sell.

Anyway, that’s what I occupied myself with when I was bored during this morning’s exam, and typing this out has gotten me clear through to lunchtime without having to grade anything. Woo-hoo!

It’s a hard problem. One alternative I can think of offhand, which I hear exists informally in many departments, works like this. If a student knows which professor she wants to work with, and the professor wants to work with her, the professor will advocate for her admission. That makes her more likely to get in than general applicants who don’t know whom they want to work with. But this approach may be even worse than overreliance on GRE scores: if my goal were to come up with a way to perpetuate an old boys’ network in graduate admissions, I’d be hard pressed to come up with something more effective. The end game of making this kind of thing systematic is that former students and classmates of big-name professors will suggest that their undergraduate students work with Prof. Bigshot and back it up with glowing letters of recommendation. So while I understand this sort of thing happening on the margins, I do not advocate it as a general solution.

I will note, however, that biologists suck at math

Or that people who suck at math and want to pursue a graduate STEM degree tend to go into biology as opposed to physics. Or people who suck at math and would want to do physics realize that pursuing a graduate degree would be foolhardy, and instead go into the private sector or venture capital, rather than going for the Ph.D.

You can’t neglect the factor of self selection in the datasets – the groups you’re averaging over (subject-wise) are the people who have the desire to get a graduate degree in the given subject, and think they’ve got a decent chance at doing so.

Also – and I’m not trying to defend the GRE – a question that comes up in my mind is whether it’s really the case that the GRE is biased against women and minorities, or is it more the case that women and minorities are being less well prepared for graduate school prior to taking the GRE. The linked article takes the racial/gender discrepancies of the GRE as prima facie evidence that the GRE is an inadequate metric of ability. An alternate explanation of the discrepancy is that the GRE accurately measures existing discrepancies in preparation. (An assertion that I, too, raise eyebrows at, but one that the authors seem to completely neglect.)

That said, the authors do offer evidence that their GRE-free programs give good success, but it does sound like these are special programs, likely with special help and extra support for participants. Would similar results be obtained for “regular” graduate programs? Insufficient data is provided. A poor GRE score may not be indicative of a lack of success, but perhaps only given appropriate support for those coming in with less developed “quantitative reasoning” skills. That would be critical to know for people thinking of switching to a GRE-free model.

First, I figured if you’d be blogging anything from the latest Nature it would be the EXO result.

As to why the GRE general test is used in admissions to physics grad programs, let’s break this discussion down by section.

Quantitative: The quantitative section of the general GRE, last I checked, was high school math. Many physics admissions committees probably believe that if you have a physics degree but aren’t acing a test of high school math, there is a serious problem and you shouldn’t be getting a graduate degree in physics. There are two possible ways to change the thinking of admissions committees on this: Persuade them that the GRE quantitative section is not a good measure of mathematical skill, or persuade them that mastery of math at the level of the GRE quantitative test isn’t a good predictor of graduate school success. I think the first point is one that we can consider, but the second is probably a non-starter. (Though, like you, I’m not in a department with a grad program.)

Verbal and analytical writing: My impression was that these don’t get much weight in physics departments as long as you’re above some modest threshold. Interestingly, my observation is that among the people who complain the most about the use of the quantitative section and physics subject test, there’s actually a certain amount of sympathy for the verbal section. Occasionally one of them recites some folklore that the verbal section is an OK predictor of grad school success, and they seem to be OK with that. It might be because they’ve staked so much of their argument on the other sections not being good predictors so they sort of have to stand by a good predictor. (I don’t know if it actually is a good predictor, but they believe that it is, and the psychology of their response is interesting.) Or it might be that verbal and writing skills are often labeled “soft skills” and the critics of the GRE are coming at this from a consideration of people and diversity, so they value “soft skills”.

Again, it might even be that the verbal and analytical writing sections are NOT good predictors of success in physics grad school. But some people believe that they are, and the response is interesting. Especially since these sections would seem to be more vulnerable to cultural bias than the quantitative section and the physics subject test.

Or that people who suck at math and want to pursue a graduate STEM degree tend to go into biology as opposed to physics. Or people who suck at math and would want to do physics realize that pursuing a graduate degree would be foolhardy, and instead go into the private sector or venture capital, rather than going for the Ph.D.

I agree, that’s a part of this. I think there’s something similar at work in the engineering scores being higher, on the whole, than the physical sciences ones. Undergraduates with engineering degrees have reasonably good employment prospects without further training, and thus less of a need to go to grad school, so only the really top students bother. Which gets you higher scores on average, even though I think most physicists would say that undergrad engineers are less mathematically gifted than undergrad physicists.

(The original “biologists suck at math” was, as always, intended to be at least slightly humorous…)

Also – and I’m not trying to defend the GRE – a question that comes up in my mind is whether it’s really the case that the GRE is biased against women and minorities, or is it more the case that women and minorities are being less well prepared for graduate school prior to taking the GRE. The linked article takes the racial/gender discrepancies of the GRE as prima facie evidence that the GRE is an inadequate metric of ability. An alternate explanation of the discrepancy is that the GRE accurately measures existing discrepancies in preparation.

That’s an interesting point. On some level, I suspect that may be a distinction without a difference, though. I’m not sure it really matters what level the bias creeps in at.

Somebody else noted elsewhere on social media that there’s an unexamined assumption in the argument, namely that measures that increase diversity will necessarily produce better researchers. The closest thing to evidence of that is the statement that “all students who have completed PhDs [at their program] are employed in the STEM workforce as postdocs, university faculty members or staff scientists in national labs or industry,” which is potentially a very good testament to their program (especially as these aren’t elite schools), but would depend a bit on the exact nature of those jobs.

That said, the authors do offer evidence that their GRE-free programs give good success, but it does sound like these are special programs, likely with special help and extra support for participants.

The article did have a buried link to the Fisk-Vanderbilt Bridge Program website. It’s not clear to me whether this is related to the American Physical Society Bridge Program (who keep sending our department stuff), but it definitely seems to include additional financial support.

How well this approach scales is a major question. Does it require intensive resources that can’t easily be pulled together in other places? It’s not clear.

a question that comes up in my mind is whether it’s really the case that the GRE is biased against women and minorities, or is it more the case that women and minorities are being less well prepared for graduate school prior to taking the GRE

You’d need some good data to investigate this, but these two choices are not mutually exclusive.

I have long been of the opinion that what standardized tests like the GRE measure is the ability to take standardized tests. That the SAT can be and routinely is gamed is evidence supporting that view. Beyond here I can only speculate, but it would hardly be surprising if standardized tests tended to favor the dominant culture–in the US, that’s white males. Actual styles will vary among students, but a random white male student may be more likely to be close to the culture and educational style favored by the tests than a random female or minority student.

At the same time, there are many stories of white males getting superior mentoring to young women and minorities, even at the college level. I don’t have data handy, and of course not all white male professors are racist male chauvinist pigs, but AFAIK there are two circumstances under which minorities and women get mentoring that is reliably of the same caliber as what white males get: when the professor is female or minority, and when the college in question is one or more of all-female, HBCU, etc. It’s not a stretch to think that white males might, on average, be getting better advice about how to take the GRE.

In 1957, I received my BS while on scholastic probation. I had no idea of going to graduate school, but took the GRE because I do well on standardized tests and it couldn’t hurt. A couple of years later I was provisionally admitted to an MS program based on my GRE scores. Fast forward: I am now a Biology Professor Emeritus.

Social promotion to a surgeon holding a scalpel is madness. Your chart shows not a racial defect but instead an absence of genius. Mediocrity is human SOP. Objective performance matters. Cull the herd. Do not invest in what is not.

If you fail basic math in the classroom, in the GRE, in the workplace, then you are only fit for management damaging the company but not its customers. I scored GRE 750/750, did the Chemistry test off the chart. 23andMe says I am a heritable winner. Seek diamonds in kimberlite, but do not reject them in river gravel.

No comment on the race or sex breakdown, but for Pete’s sake, can we please separate the more quantitative fields from the less quantitative ones more thoroughly? Geology has picked up a lot of math (particularly as reservoir modeling software has advanced over the years), but, as the interviewers at one of the biggest seismic houses told me years ago, it’s a lot easier to teach physicists, mathematicians, and electrical engineers the geology required than it is to teach a geologist the math for seismic data processing.

Views like that indicate that fields can’t be lumped wholesale into “physical sciences” or “engineering” or “life sciences” so simply.

Couple of quick comments: the verbal GRE is considered to have some predictive power in physical sciences grad admissions, mostly because there is actually some spread in the applicants, and “because writing is important…”;

the quantitative math is not difficult, but there are a lot of questions and some of them are “trick questions”, which is what winnows out the people who really know the math and gives the spread, but there is very little spread generally for physical science applicants.

First, that argument about the salary earned by women (generally, based on the sentence structure) would also apply to men (generally). I don’t believe that women with a PhD in physics earn 1/5 of what men with a physics PhD earn. It is silly to compare the salary of a person whose highest degree is a BA in whatever to someone with a PhD. It might be interesting to compare salaries for a woman with a BS in engineering to a man or woman with a PhD in engineering. Maybe the smart ones get a job.

I was wondering if you can predict the GRE based on the SAT, and the internets gave at least one answer:

http://arxiv.org/abs/1004.2731

One issue with the quantitative part was something I noticed with the SAT quant when I took it in HS. Some of it was on material I hadn’t used in years because I was dual-enrolled taking calculus at the time. (An SAT prep class would have fixed that, but only really poor students did that back when I was in HS.) That could be even more true for someone already doing research in their senior year of college. You know the important things really well.

I recall one story from my youth of someone who came in with really high GRE subject scores because he had worked as a TA for freshman physics while a senior. He knew all that basic stuff cold but had trouble moving into research where there were no answers (or even questions) at the back of the book. That is a different skill set than what is measured on standardized tests.

That said, I have trouble imagining someone with a 550 math score being a productive researcher in theoretical physics, but less concern about areas in biology and chemistry that depend heavily on spatial skills and pattern recognition as long as the part they got right was relevant to their studies (algebra or statistics, rarely both).

First, it appears that physics majors in all racial and gender categories have a wide range of GRE quantitative scores: http://www.aps.org/publications/apsnews/201302/grequantscores.cfm

So they aren’t all that well-clustered.

Second, a sample GRE quantitative test is here, and appears to be testing things that physics majors ought to do well on:

https://www.ets.org/gre/revised_general/prepare/quantitative_reasoning

Now, there are two possible reasons why a lot of physics majors might not be acing this test:

1) They have strong math skills, but this test is a poor measure of those skills.

2) A lot of physics majors don’t have terribly good math skills.

Explanation 2 might seem odd to somebody who has never taught upper-division physics majors at a non-elite school…

It is also possible that this is a perfectly reasonable test, but a score cutoff of 700 (or whatever) is unreasonable, because the test is actually pretty subtle.

So looking at the APS data, whites and Asians represent about 90% of those taking the GRE quantitative, and for those groups the spread is small, so the spread is generally small. That is why admissions ought to disregard the GRE quantitative for admissions to physics: it has little predictive value for the vast majority of applicants, and research suggests it is a biased indicator of success for the subset for which there is a spread.
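The "small spread, little predictive value" argument is a standard statistical effect, usually called range restriction. A measure can correlate reasonably well with an outcome across a whole population and still correlate weakly within a pool bunched near the top. Here is a minimal Python simulation of it. It assumes a bivariate normal relationship with a correlation of 0.5 and keeps the top 10% of scorers. These are simulated numbers, not GRE data, and they say nothing about the bias claim.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate(n=20000, rho=0.5, keep_top=0.10, seed=1):
    """Correlation in the full simulated pool vs. only the top scorers."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0, 1)  # standardized test score
        # later "success", correlated with x at level rho
        y = rho * x + (1 - rho ** 2) ** 0.5 * rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    full = pearson(xs, ys)
    # Keep only the highest scorers, mimicking a pool clustered near the top
    pairs = sorted(zip(xs, ys), reverse=True)[: int(n * keep_top)]
    top_x, top_y = zip(*pairs)
    return full, pearson(top_x, top_y)


full_r, restricted_r = simulate()
print(f"full pool r = {full_r:.2f}, top 10% only r = {restricted_r:.2f}")
```

The restricted correlation comes out well under half the full one. That's the sense in which ranking within a tight cluster of high scores can carry little information even when the test itself isn't useless.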

The shocking statistic buried in these numbers is the raw number of minority applicants each department typically sees per year. The GRE quantitative score reported is across all physical sciences, all intended applicants.

I read in a hurry (low verbal score for me!) and thought the APS site was only showing physics majors, not all physical sciences. My bad.

Alex@#11

Thanks for the link to the GRE design details. As I suspected, it looks pretty much like an SAT test, with things like permutations and combinations that I forgot before I was a junior in HS. The spread of scores on that test would tell you very little about a physics major other than whether they took a prep class and crammed things they will never use except on that test.

Also, as Alex corrected @#13, the first link is still just data for all physical science majors, not physics majors. It would be nice to see data that separates physics from chemistry, geology, biochemistry, astronomy (not just astrophysics), meteorology, oceanography, etc. Some of the subfields in those other physical sciences are heavily mathematical while others are not or emphasize topics like statistical inference that are also not on the GRE.

Do they have that? I’d guess not or the APS article would have used them.

Interestingly, ETS does break down scores by intended field of graduate study in this document:

http://www.ets.org/s/gre/pdf/gre_guide.pdf

Admittedly, some physics majors go for graduate study in other fields, but this is what we have to work with. On the new scale, about 90% of physics majors get a score of 155 or better (700 or better on old scale) and about 65% get 160 or better (760 or better on the old scale).

Differences within this range might not mean much, but falling below that range tells us one of two things, depending on what you believe about the test:

1) That student has quantitative skills well below most physics majors. Probably because of poor preparation rather than lack of inherent talent. Still, a person with weak quantitative skills is not somebody you want as your grad student.

2) The test is arbitrary and biased and the low score is telling you nothing.

I suspect there is some truth to the second argument, and yes, there is always the individual with test anxiety or somebody who was sick that day or whatever. However, leaving aside anxiety cases and allowing for a retest if a relative died the day before or whatever, I don’t quite believe that completely bombing a test of high school math is an event with zero information content. Not when you are a physics major.

Indeed, I would say that when designing an experiment or interpreting data, some of the questions on the quantitative GRE are probably more relevant than the ability to manipulate Hermitian operator identities.

Thanks. That is really interesting!

Oh, I definitely believe the quant test is not biased. It measures what it sets out to measure. And I definitely agree that you might think twice about someone who had a score below the old 700 unless you also saw that they had similar SAT scores in HS and yet did fine in their math and physics classes at a reputable college.

Your tough challenge is to decide if ranking within that range including 90% of physics grads is relevant to success in your program and improving its reputation. Do you do any post-hoc analysis of admissions data against performance? Can you measure “grit” and “slack”?

I forgot to say what was “interesting”.

One third of the Physical Science data is for computer science and information science, where 8% fall below the 145 line. Earth science (15% of the total) and chemistry (17%) have 6.3% and 3.3% below that line, respectively, while physics (15%) and math (20%) have 0.6% and 0.7%, respectively. The long tails seen in the aggregate data are from the least mathematical of the physical sciences.

The 145 line is around a 530.
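Translating between the old and new scales from the points quoted in this thread (145 ≈ 530, 155 ≈ 700, 160 ≈ 760) can be roughed out by linear interpolation. ETS publishes official concordance tables, so treat this Python sketch as an approximation only. The function name is hypothetical, and the conversion is plainly nonlinear: about 17 old-scale points per new-scale point between 145 and 155, but only 12 between 155 and 160.

```python
from bisect import bisect_left

# (new-scale, old-scale) pairs quoted in this thread; approximate,
# not ETS's official concordance table
POINTS = [(145, 530), (155, 700), (160, 760)]


def old_from_new(new_score):
    """Linearly interpolate an old-scale quantitative score from a new-scale one."""
    xs = [new for new, _ in POINTS]
    if not xs[0] <= new_score <= xs[-1]:
        raise ValueError("outside the range covered by the quoted points")
    i = bisect_left(xs, new_score)
    if xs[i] == new_score:
        return POINTS[i][1]
    (x0, y0), (x1, y1) = POINTS[i - 1], POINTS[i]
    return y0 + (y1 - y0) * (new_score - x0) / (x1 - x0)


print(old_from_new(150))  # halfway between the 145 and 155 points
```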

Whatever you do, do not look at education administration (31.3% below that line) or education evaluation and research (32.7%). Scary when you think about the kinds of analysis they have to do.
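The claim that the long tail comes from the less mathematical fields can be checked with a weighted sum. Multiply each field's share of physical-sciences test-takers by the fraction of that field scoring below 145, then see how much of the total each field contributes. This is a minimal Python sketch using only the rounded shares and fractions quoted in the comment above. Those shares add up to slightly more than 100%, so the code normalizes them first.

```python
# (share of physical-sciences test-takers, fraction scoring below 145),
# as quoted in the comment above; "one third" for CS/IS is approximate
fields = {
    "computer/information science": (1 / 3, 0.080),
    "earth science": (0.15, 0.063),
    "chemistry": (0.17, 0.033),
    "physics": (0.15, 0.006),
    "math": (0.20, 0.007),
}

# The quoted shares sum to slightly over 1, so normalize them
total_share = sum(share for share, _ in fields.values())
below = {
    name: share / total_share * frac for name, (share, frac) in fields.items()
}
overall = sum(below.values())

print(f"aggregate below 145: {overall:.1%}")
for name, amount in sorted(below.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:30s} {amount / overall:.0%} of the low tail")
```

On these numbers, computer and information science account for well over half of the sub-145 tail, and physics and math together contribute only a few percent of it.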

Your tough challenge is to decide if ranking within that range including 90% of physics grads is relevant to success in your program and improving its reputation.

Ranking within 90% of physics grads is probably necessary, but I am dubious on using the GRE quantitative section to do it.

The thing I keep going back to is that, for all the problems with the GRE, I’m not sure the critics have thought through the alternatives.

Grades? Grades are as variable as graders. Unless the students are coming from a school that you have experience with (which brings its own set of “old boys network” issues), you never really know if that A came from a professor who gave easy tests or hard tests. At least with the GRE you know that all of the students took the same test. It shouldn’t be the only measure, but it can provide some context. A strong GRE coupled with mediocre grades might even help weed out the “smart but lazy” types that you don’t want as a research assistant.

Resumes? Extracurriculars? Research experiences? Resumes can be padded, you never really know what the “communications chair” for the Physics Club actually did, and you never really know if the middle author on the paper did much.

Rec letters? Ideally, those can provide context to help sort out what a student actually did in a research experience, what issues they were struggling with outside of class, etc. In practice, though, there are studies showing that a lot of faculty write differently in letters for male and female students. Adjective choice and attributes/topics emphasized depend on gender.

Personal statements? Ideally, those can provide context. However, again, students from disadvantaged backgrounds don’t always have the same writing preparation, or access to essay coaching, as students from more advantaged backgrounds.

Institutional reputation? Reputation of letter writers? I can think of no better recipe for an “old boys network.”

In the end, if you want to admit more disadvantaged and under-represented students, then you should admit more disadvantaged and under-represented students. To the extent allowed by law, practice affirmative action. If you are looking at a measure other than disadvantage, then people with advantage will find a way to compete on that measure. (Even on measures of disadvantage, affluent suburban kids will start writing essays on the travails of growing up with ADHD or whatever, but at least you can calibrate for that.)

If some are concerned about certain demographic groups being underrepresented in the hard sciences and math, why aren’t we developing more programs to target underperforming math students at ages 12 to 14? Quite a bit of research shows it is in those years that a large part of students – especially females – effectively “give up” on math and so enter high school unable to handle physics, geometry or calculus.

Alex@18:

Excellent points on the old-boys-network aspect of most alternatives to standard tests. There was one proposal some decades ago that top schools should just set a minimum threshold (for undergrad admission) and pick randomly from the pool. That avoids the problem of having a sharp cutoff based on an imperfect instrument.
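The threshold-then-lottery proposal is easy to state precisely. Drop everyone below a minimum, then draw uniformly at random from everyone above it. Here is a minimal Python sketch. The function name, applicant names, and scores are hypothetical, and a real program would apply the threshold to whatever combined measure it trusts.

```python
import random


def threshold_lottery(applicants, min_score, n_admit, seed=None):
    """Admit up to n_admit applicants, drawn uniformly at random
    from those scoring at or above min_score."""
    eligible = [name for name, score in applicants.items() if score >= min_score]
    rng = random.Random(seed)
    return sorted(rng.sample(eligible, min(n_admit, len(eligible))))


# Hypothetical applicant pool (name -> composite score)
pool = {"A": 82, "B": 67, "C": 91, "D": 74, "E": 58, "F": 88}
admitted = threshold_lottery(pool, min_score=70, n_admit=2, seed=42)
print(admitted)
```

The design choice is deliberate. Above the threshold, score differences are ignored entirely, so an imperfect instrument never gets asked to make fine distinctions it can't support.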

I happen to believe in data-driven decisions, which means doing some blind studies of how students with different levels of preparation pan out. But that requires taking some risks (for you and for them) to get the sample you need. That is hard to do when grants and tenure hang on how well those students do.

Thanks again for that pointer to those data, and for (I assume) posting it in the IHE thread on this subject. I should make up a graph of them sorted by major and blog it.