Through some kind of weird synchronicity, the title question came up twice yesterday, once in a comment to my TED@NYC talk post, and the second time on Twitter, in a conversation with a person whose account is protected, thus rendering it un-link-able. Trust me.

The question is one of those things that you don’t necessarily think about right off– of course an atom is a particle!– but once it gets brought up, you realize it’s a little subtle. Because, after all, while electrons and photons are fundamental particles, with no internal structure, atoms are made of smaller things. But somehow we get away with thinking of them like single particles when talking about things like cooling clouds of atoms to BEC. We treat rubidium atoms as bosons, even though they’re really collections of a hundred-odd fermions, and we talk about them having a single de Broglie wavelength despite being a big assemblage of other stuff. But it clearly works, as nearly 20 years of BEC experiments demonstrate. So how do we get away with that?

I had never really thought of this question before Eric Cornell brought it up in a talk at a conference– I think it was a Gordon Conference, but I’m not sure– and happily, he explained it very nicely. The rule of thumb is that you can safely describe something as a single particle as long as the energy of the particle and the stuff it interacts with is smaller than the binding energy of its component particles.

“Binding energy” is a term of art that means, roughly speaking, “the energy you would need to put in to pull a piece out.” If you pick a random atom, the binding energy of its most loosely bound electron is probably around 10 eV, or 0.0000000000000000016 joules. That’s the gravitational potential energy of a 1-gram mass lifted about 10^-16 m (a small fraction of the diameter of an atomic nucleus), or the kinetic energy of a baseball moving at a few nanometers per second. But it’s a huge amount of energy to pack into the space of a single atom, enough to rip the atom apart.
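A quick back-of-the-envelope check of those comparisons, as a minimal Python sketch. The 10 eV figure is the rough value from the text; g = 9.81 m/s² and a 0.145 kg baseball are my own assumed round numbers.

```python
EV_TO_J = 1.602176634e-19  # joules per electron-volt (exact SI value)
G = 9.81                   # m/s^2, assumed surface gravity
GRAM = 1e-3                # kg
BASEBALL_KG = 0.145        # assumed mass of a regulation baseball, kg

binding_energy_j = 10 * EV_TO_J  # rough outer-electron binding energy from the text

# Height that gives a 1-gram mass this much potential energy: E = m g h
height_m = binding_energy_j / (GRAM * G)

# Speed that gives a baseball this much kinetic energy: E = m v^2 / 2
baseball_speed = (2 * binding_energy_j / BASEBALL_KG) ** 0.5

print(f"10 eV = {binding_energy_j:.2e} J")
print(f"1 g lifted by {height_m:.1e} m")
print(f"baseball moving at {baseball_speed * 1e9:.1f} nm/s")
```

The height comes out around 10^-16 m, smaller than a nucleus (nuclei are roughly 10^-15 to 10^-14 m across), and the baseball speed lands at a few nanometers per second.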

So as long as you’re dealing with particles having less energy than that, you can safely treat atoms as single particles, albeit with some internal energy states. A single photon with energy less than the ionization potential– basically anything with a wavelength longer than a couple hundred nanometers, in the deep UV range– might move an electron from one state to another inside the atom, but that doesn’t change the single-particle nature of the atom any more than flipping an electron from spin-up to spin-down changes it. And as long as the kinetic energy of the atoms in your sample is less than that, collisions between atoms aren’t going to break them apart, so you can think of them as single quantum particles.
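The photon threshold is just λ = hc/E. Here is a small sketch of that conversion; the 10 eV value is the text’s rough figure, while 4.18 eV (rubidium’s first ionization energy) and the 780 nm test wavelength are standard reference values I’m supplying, not numbers from the post. The helper names are my own.

```python
HC_EV_NM = 1239.84198  # Planck constant times speed of light, in eV*nm

def photon_wavelength_nm(energy_ev):
    """Wavelength (nm) of a photon carrying the given energy (eV): lambda = hc / E."""
    return HC_EV_NM / energy_ev

def can_ionize(wavelength_nm, ionization_ev):
    """True if a single photon of this wavelength carries at least the ionization energy."""
    return HC_EV_NM / wavelength_nm >= ionization_ev

# 10 eV is the rough figure from the text; 4.18 eV is rubidium's first ionization energy.
for label, ionization_ev in [("typical atom", 10.0), ("rubidium", 4.18)]:
    threshold = photon_wavelength_nm(ionization_ev)
    print(f"{label}: photons longer than {threshold:.0f} nm cannot ionize")

print(can_ionize(780.0, 4.18))  # 780 nm light, used for laser cooling rubidium: False
```

For 10 eV the threshold is about 124 nm, while for loosely bound alkali atoms like rubidium it is closer to 300 nm, so “a couple hundred nanometers” is a rough middle ground.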

How much kinetic energy do atoms have? Well, the temperature of a sample is, in the simplest description, a measure of the average kinetic energy of the particles, with the conversion from temperature to energy given by Boltzmann’s constant k_B, which is 1.381×10^-23 joules per kelvin (fun fact: this is the one major constant I have trouble remembering. I know the digits, but regularly screw up the exponent, for some reason, including at least once when writing a homework assignment…). That’s maybe not the most illuminating number, but there’s a convenient rough conversion that AMO physicists like me tend to know, which is that k_B times room temperature (about 300 K) is about 1/40th of an eV. So to get the thermal energy up to the 10 eV or so where you have to really worry about the component particles of atoms, you would need 400 times 300 K, or 120,000 K. So as long as you’re not working on the Sun, it’s probably safe to consider atoms as single particles.
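The rule-of-thumb conversion can be checked directly with k_B. This is a minimal sketch using the exact SI values of k_B and the electron-volt; the function names are my own.

```python
K_B = 1.380649e-23         # Boltzmann constant, J/K (exact SI value)
EV_TO_J = 1.602176634e-19  # joules per electron-volt (exact SI value)

def thermal_energy_ev(temperature_k):
    """Characteristic thermal energy k_B*T, in eV."""
    return K_B * temperature_k / EV_TO_J

def equivalent_temperature_k(energy_ev):
    """Temperature at which k_B*T equals the given energy."""
    return energy_ev * EV_TO_J / K_B

room = thermal_energy_ev(300.0)
print(f"k_B * 300 K = {room:.4f} eV, about 1/{1 / room:.0f} eV")
print(f"k_B * T = 10 eV at T = {equivalent_temperature_k(10.0):,.0f} K")
```

The unrounded numbers (about 1/39 eV at room temperature, and about 116,000 K for 10 eV) agree with the rounded 1/40 eV and 120,000 K figures.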

(Now, there are lots of scenarios where other factors complicate this– I got my Ph.D. studying ionizing collisions in metastable xenon, where the atoms were placed in an internal state with a lot of energy, so two atoms together had the energy needed to ionize one. Other experiments use things like two-photon ionization, where a single photon doesn’t have enough energy to blast an electron out of an atom, but two of them arriving at the same time do. In those situations, you need to worry a bit about the details of the internal structure, but in a pretty minimal way.)

The energy scale involved changes for different situations, but the arguments remain the same. Atomic physicists essentially always treat the nucleus of an atom as a single particle, despite the fact that it’s made up of protons and neutrons, because the binding energy involved is vastly greater than any energy we deal with. You need energies thousands to millions of times greater than the ionization energy to break a nucleus apart, so for our purposes, it’s a particle. Again, a particle with some internal states– you can flip nuclear spins and that sort of thing– but a single lump of stuff with a mass and spin determined by all its components.
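To put numbers on the nuclear scale, here is a rough comparison against the 10 eV atomic figure. The deuteron binding energy (about 2.224 MeV, one of the most weakly bound nuclei) and the roughly 8 MeV per nucleon typical of mid-mass nuclei are standard nuclear-physics values I’m supplying, not figures from the post.

```python
K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K

ATOMIC_IONIZATION_EV = 10.0    # rough outer-electron binding energy from the text
DEUTERON_BINDING_EV = 2.224e6  # total binding energy of the deuteron (weakly bound)
PER_NUCLEON_EV = 8.0e6         # rough binding energy per nucleon in mid-mass nuclei

for label, energy_ev in [("deuteron", DEUTERON_BINDING_EV),
                         ("per nucleon, mid-mass nucleus", PER_NUCLEON_EV)]:
    ratio = energy_ev / ATOMIC_IONIZATION_EV
    temperature = energy_ev / K_B_EV
    print(f"{label}: {ratio:.0e} x ionization energy, "
          f"k_B*T matches at {temperature:.1e} K")
```

Both ratios land in the hundreds of thousands, and the matching temperatures are above 10^10 K, far beyond anything in an atomic physics lab.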

In the other direction, many molecules have binding energies lower than those of atoms, so the energy needed to rip an atom out tends to be less than the energy needed to rip an electron out of a free atom. But it’s not a huge difference– the energy involved tends to be on the several-eV sort of scale still, so well into the ultraviolet. That is what makes UV light somewhat dangerous, though– the photons have an energy that’s high enough to break some organic molecules apart, and damage living organisms as a result. Thermal energy continues to not be an issue, though.
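A sketch of the molecular scale, converting a few approximate average bond enthalpies (typical textbook table values that I’m supplying; real values vary with the molecule) into eV and into the photon wavelength with that much energy. This is an energy threshold only: whether a photon actually breaks a bond also depends on the molecule absorbing it.

```python
EV_PER_MOLECULE_TO_KJ_PER_MOL = 96.485  # 1 eV per molecule is about 96.485 kJ/mol
HC_EV_NM = 1239.84198                    # Planck constant times speed of light, eV*nm
ROOM_KT_EV = 0.02585                     # k_B * 300 K in eV

# Approximate average bond enthalpies (kJ/mol) from standard tables; exact values vary.
bond_enthalpies = {"C-C": 348, "C-H": 413, "O-H": 463}

for bond, kj_per_mol in bond_enthalpies.items():
    energy_ev = kj_per_mol / EV_PER_MOLECULE_TO_KJ_PER_MOL
    wavelength_nm = HC_EV_NM / energy_ev
    print(f"{bond}: {energy_ev:.2f} eV, photon threshold {wavelength_nm:.0f} nm, "
          f"{energy_ev / ROOM_KT_EV:.0f} x room-temperature k_B*T")
```

All three come out at a few eV, with threshold wavelengths in the ultraviolet and energies over a hundred times room-temperature k_B*T.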

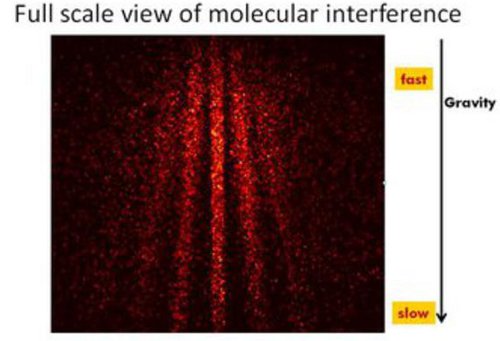

This is why Markus Arndt’s group in Vienna is able to do amazing experiments with interference of large molecules, including seeing diffraction patterns with 430-atom organic molecules and using single-molecule detection to watch the diffraction pattern build up (the “featured image” up top is from this page, and is a version of the data featured in that second paper). Even though their molecules are evaporated from an oven at (probably) 500-600 K (they don’t cite a temperature, but point at this paper), the energy of the individual molecules is low enough that they can really be thought of as single particles. These are particles with huge numbers of internal states– they talk about “1000 degrees of freedom” in promoting the paper– but that doesn’t prevent them from showing wave behavior. Each molecule interferes with itself, and as long as you can keep changes in the internal state from producing a large shift in the pattern, you can still build up a terrific interference pattern. These are just awesome experiments, by the way.
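For a sense of scale, here is a rough estimate of the de Broglie wavelength λ = h/(mv) for such a molecule. It assumes a hypothetical mass of 7000 u (a round number I picked for illustration; the post doesn’t give the mass) and uses the most probable speed of a thermal Maxwell-Boltzmann distribution at the quoted oven temperatures. Real beams are velocity-selected, so treat these as order-of-magnitude numbers only.

```python
import math

H = 6.62607015e-34         # Planck constant, J*s
K_B = 1.380649e-23         # Boltzmann constant, J/K
AMU = 1.66053906660e-27    # atomic mass unit, kg
EV_TO_J = 1.602176634e-19  # joules per eV

MOLECULE_MASS_U = 7000     # hypothetical mass of a ~430-atom molecule (assumed)

def most_probable_speed(mass_kg, temperature_k):
    """Peak of the Maxwell-Boltzmann speed distribution, sqrt(2 k_B T / m)."""
    return math.sqrt(2 * K_B * temperature_k / mass_kg)

def de_broglie_wavelength(mass_kg, speed):
    """lambda = h / (m v)."""
    return H / (mass_kg * speed)

mass = MOLECULE_MASS_U * AMU
for temperature in (500.0, 600.0):
    v = most_probable_speed(mass, temperature)
    wavelength = de_broglie_wavelength(mass, v)
    thermal_ev = K_B * temperature / EV_TO_J
    print(f"{temperature:.0f} K: v ~ {v:.0f} m/s, lambda ~ {wavelength * 1e12:.1f} pm, "
          f"k_B*T ~ {thermal_ev:.3f} eV")
```

Under these assumptions the wavelength is a couple of picometers, and the thermal energy scale is about 0.05 eV, well below the several-eV bond energies that hold the molecule together.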

(Full disclosure: I met Arndt at a conference in Europe back in 2001, and he was very helpful when I contacted him to get permission to reprint one of his graphics in my first book, suggesting a better graph than the one I had been planning to use.)

You can continue this down to much more tenuously bound systems. As I said, I hadn’t thought about this until Eric Cornell talked about it, and he brought it up in the context of Cooper pairs in ultracold gases, which is in turn an analogy to the BCS theory of superconductivity. In this theory, electrons in a superconductor (or atoms in a gas of ultracold fermions) can “pair up” through weak interactions with other particles in the system, forming composite particles that are then treated as bosons. The superconductivity transition can be thought of (fairly loosely) as forming a BEC of these Cooper pairs (after Leon Cooper, the “C” in “BCS theory”).

Cooper pairs in superconductors have binding energies in the milli-electron-volt range, so well below room-temperature thermal energy (about 25 meV). This is why superconductivity is a low-temperature phenomenon– you need to get the thermal energy down low enough that you can safely treat the weakly bound pairs as single particles, and not worry about them falling apart spontaneously. In ultracold atom systems, the binding energies are even lower, hence the need for ultracold temperatures. The same interactions could, in principle, pair up atoms at higher temperatures, but the pairs would get broken up as quickly as they formed, so a particle description just doesn’t make any sense.
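A rough check on the superconductor numbers, using the weak-coupling BCS estimate of the zero-temperature gap, Δ(0) ≈ 1.764 k_B T_c (the energy to break a pair is about 2Δ). The critical temperatures for aluminum and niobium are approximate textbook values I’m supplying, and the BCS ratio is itself an approximation for real metals.

```python
K_B_EV = 8.617333262e-5  # Boltzmann constant, eV/K

def bcs_gap_mev(critical_temperature_k):
    """Weak-coupling BCS estimate of the zero-temperature gap, Delta(0) = 1.764 k_B T_c, in meV."""
    return 1.764 * K_B_EV * critical_temperature_k * 1e3

def equivalent_temperature_k(energy_mev):
    """Temperature at which k_B*T equals the given energy (meV)."""
    return energy_mev * 1e-3 / K_B_EV

# Approximate critical temperatures of two elemental superconductors.
for metal, tc in [("aluminum", 1.2), ("niobium", 9.25)]:
    print(f"{metal}: Tc = {tc} K, gap ~ {bcs_gap_mev(tc):.2f} meV")

print(f"1 meV corresponds to {equivalent_temperature_k(1.0):.1f} K")
print(f"room temperature k_B*T ~ {K_B_EV * 300 * 1e3:.0f} meV")
```

The gaps come out in the tenth-of-a-meV to meV range, and 1 meV corresponds to only about 12 K, which is why the pairs only survive at cryogenic temperatures.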

So, that’s a longer than strictly necessary description of how and why we can get away with thinking of atoms and molecules as single particles, even though they’re made up of smaller things. It all comes down to the energy available in the problem, and as long as you’re dealing with low enough energy to keep your sample from breaking apart, it’s okay to talk about even extremely complicated objects as if they were simple (albeit quantum) particles.

——

(Astute observers might notice that there’s one aspect of this I didn’t talk about, namely why these composite particles have a de Broglie wavelength equal to what you would expect for a single particle of that mass, when they’re composed of many much smaller particles whose de Broglie wavelengths would be many times longer– a single atom in one of Arndt’s big floppy molecules, moving at the same speed as the molecule, ought to have a wavelength around 400 times longer than the wavelength of the whole molecule, but the wavelength describing the interference pattern is the short molecular one, not the long atomic one.

(I didn’t talk about this because I don’t have a great answer. If you put a gun to my head and insisted that I make one up, I’d wave my hands and talk about Fourier series– maybe when you add all those component wavelengths together in an incoherent way, you end up with something that looks like a shorter wavelength. But I haven’t ever seen that bit explained, or thought about it all that much, so I don’t know. This will likely bug me for a while, though, and if I come up with anything, I’ll be sure to post about it.)

So in response to your bracketed comment… I’ve admittedly never worked out the details of this, but I suspect that you can just choose the center of mass as your degree of freedom, just as you would in classical physics, and that this justifies treating a complicated atom as a point particle.

If you work in the Heisenberg representation, then instead of using X_i (i=1,…,N where N is the number of particles) as the operators that you evolve, you could use the center of mass X_CM and the relative positions X_12, X_23, etc., as your degrees of freedom. Then, since Heisenberg’s equations have the same form as the classical equations of motion, the Heisenberg equation for the center of mass operator should be the same as the classical equation of motion for the center of mass: namely, X_CM should act like a point particle, with a mass given by the total mass of the system, that only responds to the external potential. What gives me hope that there won’t be issues about things not commuting is that the center of mass operator is just a linear combination of the original position operators and doesn’t involve any momentum, but I’ve never worked through it, so maybe you pick up hbar-suppressed correction terms somewhere.

Then if you go back to the Schrodinger picture, the wave function for the center of mass should act like a single quantum particle, with a mass given by the total mass of the system. Then the de Broglie wavelength for the center of mass wave function would obviously be given by the total mass of the object.
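A small numerical check of this idea, under the simplifying assumption that every constituent moves with the same velocity v, so each has its own de Broglie wavenumber k_i = m_i v / ħ. The product of the individual plane waves, exp(i Σ k_i x_i), is then exactly one plane wave in the center-of-mass coordinate, exp(i K X_CM) with K = M v / ħ: the wavenumbers add, so the wavelength h/(Mv) is set by the total mass. The masses, positions, and velocity below are arbitrary made-up values, and the function names are my own.

```python
import math
import random

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
AMU = 1.66053906660e-27  # atomic mass unit, kg

def total_phase(masses, positions, velocity):
    """Sum of the individual plane-wave phases k_i x_i, with k_i = m_i v / hbar."""
    return sum(m * velocity / HBAR * x for m, x in zip(masses, positions))

def center_of_mass_phase(masses, positions, velocity):
    """Phase K X_cm of a single plane wave with K = M v / hbar."""
    total_mass = sum(masses)
    x_cm = sum(m * x for m, x in zip(masses, positions)) / total_mass
    return total_mass * velocity / HBAR * x_cm

random.seed(0)
masses = [random.choice([1, 12, 14, 16, 19]) * AMU for _ in range(430)]  # made-up composition
positions = [random.uniform(-1e-9, 1e-9) for _ in masses]                # metres, arbitrary
v = 100.0                                                                # m/s, arbitrary

a = total_phase(masses, positions, v)
b = center_of_mass_phase(masses, positions, v)
print(f"{a:.6f} {b:.6f} {math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-6)}")
```

The two phases agree for any configuration, so the composite wave depends on the constituents only through X_CM. The relative-coordinate terms vanish here only because all velocities are equal; with internal motion they reappear as factors in the relative coordinates, which is where the internal states live.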

Then the idea would be that, so long as the energy of the whole system is dominated by the center of mass degree of freedom, then it is safe to treat the whole object as a single point particle.

I know it works out fine in the case of two particles; there’s a problem about exactly this in Griffiths (5.1).

@Andrew: I think you have to be careful about how you do that calculation. For the N-particle case you have 3N total degrees of freedom: three for the CM, three for angular orientation (assuming the molecule is not linear), and the remaining 3N-6 are vibrational modes. Since the angular orientation operators (like the others) are linear combinations of the individual particle position operators, that shouldn’t be a problem (but if you screw it up, you could get angular momentum operators in there). The question is what to do with the zero point energy of those 3N-6 harmonic oscillators. I think that’s a solvable problem involving a suitable definition of reference energy, but like you, I haven’t actually done it. Note, however, that these modes do have a zero point energy, whereas the CM and orientation operators do not.
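The bookkeeping in that comment can be sketched as follows. It is the standard split of 3N coordinates into 3 center-of-mass, 3 rotational, and 3N-6 vibrational modes (2 rotational and 3N-5 vibrational for linear molecules); the function name and dictionary layout are my own illustration.

```python
def mode_counts(n_atoms, linear=False):
    """Split the 3N coordinates of an N-atom molecule into CM, rotational, and vibrational modes."""
    if n_atoms == 1:
        return {"center_of_mass": 3, "rotation": 0, "vibration": 0}
    if n_atoms == 2:
        linear = True  # a diatomic molecule is always linear
    rotations = 2 if linear else 3
    return {"center_of_mass": 3, "rotation": rotations,
            "vibration": 3 * n_atoms - 3 - rotations}

print(mode_counts(3))               # water (bent)
print(mode_counts(3, linear=True))  # carbon dioxide
print(mode_counts(430))             # a 430-atom molecule
```

For a 430-atom molecule this gives 1284 vibrational modes, each with its own zero-point energy, which is the same order as the “1000 degrees of freedom” quoted for the Vienna experiments.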

Just wanted to say I really liked this post. I just gave a guest lecture talking about energy scales for our physical chemistry lab class and I think I blew the students’ minds by using K as a unit of energy. The exponent of k_B is pretty easy to remember because of its connection to 1/N_A (Avogadro’s number): k_B = R/N_A, where R is the ideal gas constant. R is about 8 when the units are in J/(mol K) and N_A is about 6e23 per mole, which will give you about 1e-23. That’s how I remember it, anyways. The number I personally find more useful is k_B = 0.7 cm^-1/K, but that’s because I did infrared spectroscopy for my PhD.
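Both tricks are easy to verify with the exact SI constants. This is a minimal sketch; dividing k_B by hc (with c in cm/s) converts it into wavenumbers per kelvin.

```python
R = 8.314462618             # molar gas constant, J/(mol K)
N_A = 6.02214076e23         # Avogadro constant, 1/mol
H = 6.62607015e-34          # Planck constant, J*s
C_CM_PER_S = 2.99792458e10  # speed of light, cm/s

k_b = R / N_A                            # J/K
k_b_wavenumber = k_b / (H * C_CM_PER_S)  # cm^-1 per K

print(f"k_B = {k_b:.4e} J/K")
print(f"k_B = {k_b_wavenumber:.3f} cm^-1/K")
print(f"rough mnemonic: 8 / 6e23 = {8 / 6e23:.1e}")
```

The wavenumber form comes out near 0.695 cm^-1/K, so room temperature corresponds to roughly 200 cm^-1.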

Chad,

About that wave business at the end of your post: you might start with Planck’s relation, that energy is proportional to frequency. The mathematical principle would be that the sum of two sine waves equals twice the product of a sine and a cosine, whose frequencies are half the sum and half the difference of the frequencies of those two waves.
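The identity in question is sin a + sin b = 2 sin((a+b)/2) cos((a-b)/2). Here is a quick numerical check of it; the 440 Hz and 444 Hz frequencies and the sampling grid are arbitrary choices of mine.

```python
import math

def sum_of_sines(t, f1, f2):
    """sin(2 pi f1 t) + sin(2 pi f2 t)."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)

def product_form(t, f1, f2):
    """2 sin(2 pi f_avg t) cos(2 pi f_half_diff t), with f_avg = (f1+f2)/2, f_half_diff = (f1-f2)/2."""
    f_avg = (f1 + f2) / 2
    f_half_diff = (f1 - f2) / 2
    return 2 * math.sin(2 * math.pi * f_avg * t) * math.cos(2 * math.pi * f_half_diff * t)

f1, f2 = 440.0, 444.0  # Hz, arbitrary example frequencies
samples = [i * 1e-4 for i in range(1000)]
max_error = max(abs(sum_of_sines(t, f1, f2) - product_form(t, f1, f2)) for t in samples)
print(f"largest difference over {len(samples)} samples: {max_error:.1e}")
```

With nearby frequencies this is the familiar beat pattern: a fast oscillation at the average frequency inside a slow envelope at half the difference. The audible beat rate is the full difference f2 - f1, because the envelope’s magnitude peaks twice per cycle.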

Perhaps your readers will find Walter Lewin’s Vibrations and Waves lectures a helpful refresher.

http://web.mit.edu/physics/people/faculty/lewin_walter.html

The relevant lecture would be #8

https://www.youtube.com/watch?v=_Fz0PSbew0g