A few months back, I got a call from a writer at a physics magazine, asking for comments on a controversy within AMO physics. I read a bunch of papers, and really didn’t quite understand the problem; not so much the issue at stake, but why it was so heated. When I spoke to the writer (I’m going to avoid naming names as much as possible in this post, for obvious reasons; anyone I spoke to who reads this is welcome to self-identify in the comments), he didn’t really get it, either, and after kicking it around for a while, it failed to resolve into a story for either of us– in his case, because journalistic reports need to have a point, in my case because I didn’t have time to write up an inconclusive blog post.

This has nagged at me for a while, though, and last week at DAMOP, I took the opportunity to ask a bunch of people who seemed likely to have opinions on the subject about it. I’m not sure the result is any more conclusive than it would’ve been some months back, but I have some free time right now, so I’ll write it up this time, and see what happens. Again, I’m going to leave the details of responses a little vague, in hopes of not getting anybody other than me in trouble; I’m not a professional journalist, and didn’t solicit quotes as a reporter, so it would feel wrong to directly attribute words to specific individuals in writing it up on the blog. Ultimately, I’m giving my own take on the matter after discussion with a bunch of people who know the specifics better than I do, and this shouldn’t be taken as more than that.

The whole thing starts with a Nature paper from 2010 by Holger Müller, Achim Peters, and Steven Chu (because writing Nature papers is how Steve Chu unwinds) that sadly is not on the arxiv, because Nature. This paper re-interpreted some earlier results that used the interference of atom waves to measure the acceleration of gravity (from 1999 and 2001) as a measurement of the gravitational redshift instead. This was followed not long after by a comment from a French group including Chu’s co-Nobelist Claude Cohen-Tannoudji. This drew a response, then a counter-response, and a back-and-forth argument that got fairly heated for scientific literature, and can be traced in the citation history of the original paper. The Clash of the Nobel Laureates angle to the whole thing gave it a little media juice, and one or two of the papers sound annoyed enough to make me suspect that if either side had access to a kraken, it would’ve been released long ago.

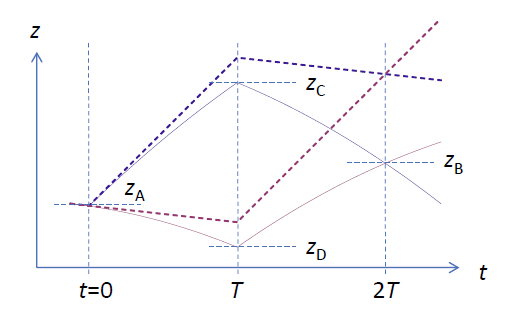

So, what’s going on, here? The experiments at the heart of the whole business use an atom interferometer, shown schematically above in a figure taken from the arxiv version of a Phys. Rev. Letter by Mike Hohensee and the Chu group (RSS readers need to click through). The idea is that you start with a bunch of atoms at the lower left at time zero, split them in half, and launch half of them upward. Some time later, you stop the bunch that was launched, and launch the bunch that was stopped. When the two bunches get to the same position, at the upper right, you mix them together, and look at how many come out on the two possible exit paths. Since quantum mechanics tells us that atoms behave like waves, there will be an interference pattern here that depends on the “phase” of these waves, or more precisely on the difference in phase between the two.

In the absence of gravity, the two paths followed by the atoms should be more or less identical, and correspond to the straight dashed lines in the figure. When you turn gravity on, the atoms move on the curved paths instead, and you find that the phase difference between them depends on the strength of gravity; thus, you can use this to measure the strength of gravity. This was demonstrated by Kasevich and Chu back in 1991 when dinosaurs roamed the Earth and I was a callow undergrad. (Full disclosure: I worked for Kasevich as a post-doc at Yale in 1999-2001.)
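To put rough numbers on "the phase difference depends on the strength of gravity," here is a minimal sketch using the standard leading-order result for this kind of interferometer, phase = k_eff × g × T², where T is the time between laser pulses and k_eff is the effective wavevector of the light that kicks the atoms. The wavelength, pulse spacing, and value of g below are illustrative assumptions, not numbers from any of the papers discussed here.

```python
import math

# Illustrative values (assumptions, not taken from the papers discussed):
WAVELENGTH_M = 852e-9     # approximate cesium D2 wavelength
PULSE_SPACING_S = 0.16    # hypothetical time T between laser pulses
G_M_PER_S2 = 9.81         # nominal local acceleration of gravity


def interferometer_phase(g, pulse_spacing, wavelength):
    """Leading-order phase k_eff * g * T^2 for a two-photon interferometer."""
    # Two counter-propagating photons give twice the single-photon wavevector.
    k_eff = 2 * (2 * math.pi / wavelength)
    return k_eff * g * pulse_spacing ** 2


def fractional_g_resolution(phase_resolution_rad, g, pulse_spacing, wavelength):
    """Fractional change in g that shifts the phase by phase_resolution_rad."""
    return phase_resolution_rad / interferometer_phase(g, pulse_spacing, wavelength)


phase = interferometer_phase(G_M_PER_S2, PULSE_SPACING_S, WAVELENGTH_M)
resolution = fractional_g_resolution(1e-3, G_M_PER_S2, PULSE_SPACING_S, WAVELENGTH_M)
print(f"total phase: {phase:.3e} rad")
print(f"fractional change in g per milliradian: {resolution:.1e}")
```

With these assumed inputs the total phase is millions of radians, which is why resolving a small fraction of a radian translates into a very fine measurement of g.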

That was a nice paper, and everybody was happy with it and the two better measurements in 1999 and 2001. The key to the 2010 paper by Müller et al. is a re-interpretation of the basic scheme. Rather than thinking of the atoms as waves undulating along these paths, they pointed out that you could think of the atoms as little clocks, oscillating at a frequency known as the “Compton frequency.” These clocks, according to relativity, “tick” at different rates depending on their state of motion and their position relative to the Earth. In this picture, the atoms on the upper path “tick” at a different rate than those along the lower path, and the result of this difference is a phase shift that shows up in the interference pattern. Thus, the measurements Peters and company made in 1999 and 2001 can be viewed as a measurement of the “gravitational redshift,” and a test of relativity.

That re-interpretation ruffled a lot of feathers, for reasons that were unclear to me. In the end, the simple calculation of the gravitational redshift ends up depending on exactly the same strength-of-gravity parameter g as the acceleration measurement, so it seems like just a matter of terminology. Somewhat more formally, you can cast the phase difference that you measure in the experiment mathematically as the sum of three terms, one having to do with the interaction between the lasers used to push the atoms around, and the other two having to do with the motion of the atoms. When you work it all out, it turns out that you can make the laser-interaction term exactly equal to one of the other two, and which one you pick determines whether you call it an effect of acceleration on the atoms, or an effect of gravity on the internal “clocks” of the atoms. Either way, the difference is the same, and tells you something about gravity.
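A back-of-the-envelope check of why the two descriptions land on the same number, as a sketch rather than the full calculation in the papers: treat each atom as a clock at the Compton angular frequency mc²/ħ, let the two arms be separated by the recoil velocity ħk/m times the elapsed time (growing for a time T, then shrinking for a time T), and keep only the redshift term g × Δz / c². The mass and ħ cancel, and what is left is k × g × T², the same expression as the accelerometer phase. All numerical inputs below are illustrative assumptions.

```python
import math

HBAR = 1.054571817e-34                # reduced Planck constant, J s
C = 299792458.0                       # speed of light, m/s
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg

# Illustrative assumptions, not values from the papers discussed:
MASS_KG = 132.905 * ATOMIC_MASS_UNIT  # approximate cesium-133 mass
K_EFF = 4 * math.pi / 852e-9          # two-photon wavevector near 852 nm
T = 0.16                              # hypothetical pulse spacing, s
G = 9.81                              # nominal gravity, m/s^2


def accelerometer_phase(k_eff, g, t):
    """Phase in the 'falling atoms' picture: k_eff * g * T^2."""
    return k_eff * g * t ** 2


def clock_phase(mass, k_eff, g, t):
    """Phase in the simplified 'clock' picture (redshift term only)."""
    compton_omega = mass * C ** 2 / HBAR
    recoil_velocity = HBAR * k_eff / mass
    # The arm separation rises linearly for T and falls for T, so its time
    # integral is the area of a triangle: (1/2) * (2T) * (v_recoil * T).
    integrated_separation = recoil_velocity * t ** 2
    return compton_omega * (g / C ** 2) * integrated_separation


phase_accel = accelerometer_phase(K_EFF, G, T)
phase_clock = clock_phase(MASS_KG, K_EFF, G, T)
print(phase_accel, phase_clock)
```

The two printed values agree to floating-point precision, which is the numerical version of "either way, the difference is the same." This sketch ignores the time-dilation and laser-interaction bookkeeping that the actual argument is about.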

My initial take on this was that the negative response was prompted by the fact that it seems kind of cheesy to get a second Nature paper out of re-analyzing ten-year-old experimental data. Had this been an entirely new experiment, I would’ve said “Hey, cool!” but since it was just a new interpretation of old results, it was more “That’s cool, but…” This doesn’t explain the vehemence of the responses, though, or the way it dragged on through a couple of years of dueling papers.

So, what was the result of my asking around? First and foremost, the dominant reaction I got when I brought this up was “Oh, God, not this mess…” Even people who went on to give strong opinions one way or the other started by rolling their eyes at the whole controversy; a few refused to talk about it at all.

Among those who went on to give opinions, the general responses can be broken into two classes: funding, and personalities. The funding argument is basically that the mostly-European groups that have objected to the reanalysis have a vested interest in keeping the interferometer from being seen as a redshift measurement, because there’s a European mission to make gravitational redshift measurements in space, and if you can do just as well on the ground, that puts millions of Euros of grant money at risk. The personality argument is basically that while the interferometry experiments are very clever, the clock/redshift interpretation is overselling them in a way that bothers some people; Cohen-Tannoudji in particular is seen as a very level-headed guy, not prone to overhyping matters, which inclines some people toward his side.

Outside and between these two camps is a third view, which I’ve moved toward after last week’s conversations, which is basically the “shut up and calculate” analogue: that whether you call it a test of redshift or an accelerometer, what you’re really doing is looking for a violation of the Equivalence Principle. The name you give it is just semantics, and what’s important is that a) the Equivalence Principle works, and b) testing it at high precision is Really Cool. The controversy has cooled off significantly, and there may be some movement toward, if not a reconciliation of the two views, then at least something along these lines.

Of course, lots of new stuff could happen. In particular, Müller and company earlier this year doubled down on the clock interpretation of the interferometry experiments with a Science paper on a “Compton Clock” (not on the arxiv, because Science). This is based on the observation that, if you tilt your head and squint, you can write the required laser frequencies for the interferometer experiment as fractions of the Compton frequency (which is around 10^25 Hz, ten billion times higher than the laser frequency, give or take). The Compton frequency depends only on the mass of the atoms, so if you view everything as a fraction of that, stabilizing the lasers based on the interferometer signal amounts to referencing your “clock” directly to the mass of the atoms.
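For a sense of scale, here is a minimal sketch of the Compton frequency, mc²/h, for a cesium atom, compared with an optical laser frequency. The cesium mass is an approximate value and the 852 nm wavelength is an assumption chosen to stand in for the interferometer lasers; neither number is taken from the Science paper.

```python
PLANCK_H = 6.62607015e-34             # Planck constant, J s
C = 299792458.0                       # speed of light, m/s
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg

CS133_MASS_U = 132.905                # approximate cesium-133 mass, atomic mass units
LASER_WAVELENGTH_M = 852e-9           # assumed laser wavelength (near the cesium D2 line)

# Compton frequency: the rest energy m c^2 expressed as a frequency.
compton_frequency_hz = CS133_MASS_U * ATOMIC_MASS_UNIT * C ** 2 / PLANCK_H
laser_frequency_hz = C / LASER_WAVELENGTH_M
ratio = compton_frequency_hz / laser_frequency_hz

print(f"Compton frequency: {compton_frequency_hz:.2e} Hz")
print(f"laser frequency:   {laser_frequency_hz:.2e} Hz")
print(f"ratio:             {ratio:.1e}")
```

With these inputs the Compton frequency comes out at a few times 10^25 Hz and the ratio falls between 10^10 and 10^11, consistent with the "give or take" above.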

This is only indirectly related to the earlier controversy, though it recapitulates the essential features: it’s a really cool experiment, based on a clever idea that strikes a lot of people as over-selling. Questions about the “Compton clock” got even more eye-rolling than questions about the original controversy, with a lot of people thinking it’s basically too clever for its own good.

For their part, Müller’s folks stick to their guns on this– a person I spoke to from that group insists that there aren’t any other frequency sources involved, and that the “clock” frequency they get is extremely repeatable and comes naturally out of the experiment, suggesting it really is something to do with their atoms. Unfortunately, they’re not really set up to do the test that would be most convincing: their interferometer uses cesium, which has only a single abundant isotope suitable for the experiment. As with so many other experiments in AMO physics, this would be much better if they used rubidium, which has two abundant isotopes, rubidium-85 and rubidium-87. In that case, they could run the “clock” for both, and show that the fraction they measure differs by 2/87ths, an amount that really wouldn’t show up anywhere else.
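The 2/87ths figure is easy to check. Since the Compton frequency is proportional to mass, the fractional difference between the two isotopes' frequencies is just the fractional mass difference. A quick sketch, using approximate isotope masses in atomic mass units (the specific values are my inputs, not from the papers):

```python
# Approximate atomic masses in atomic mass units (assumed reference values).
RB85_MASS_U = 84.911790
RB87_MASS_U = 86.909181


def fractional_difference(heavier, lighter):
    """Fractional difference between two quantities, relative to the heavier one."""
    return (heavier - lighter) / heavier


# Compton frequency scales linearly with mass, so the mass ratio is all we need.
mass_based = fractional_difference(RB87_MASS_U, RB85_MASS_U)
nucleon_count_estimate = 2 / 87

print(f"from masses: {mass_based:.6f}")
print(f"2/87:        {nucleon_count_estimate:.6f}")
```

The two agree at the level of a few parts per million; the small residual reflects the fact that atomic masses are not exact multiples of the nucleon number.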

Of course, the unfortunate reality is that Müller’s lab is only set up to work with cesium, while the obvious lab to do this in rubidium is run by one of the European groups opposed to the whole idea. So I wouldn’t expect a completely dispositive resolution of this any time soon. It will probably remain a source of (low-level) controversy for a good while yet…

And that’s what people were arguing about at DAMOP last week.

I always claim that the gravitational redshift is just relativity’s way of letting you see potential energy. If you do the Newtonian calculation, indeed, a photon that appears one shade on Earth will appear much bluer if you raise it 500km. In fact, the wavelength shift matches the potential energy integral perfectly.

Wouldn’t the “proper time along the world-line” calculation be in principle more accurate than a “phase difference in a Newtonian set-up with gravity”, though perhaps identical as far as achievable experiments are concerned?

I wonder if there’s some deep fundamental undercurrents driving this “debate”. For example, when you raise a brick you do work on it, and its mass increases. Ditto for an atom. Mass in GR is not invariant. And see http://link.springer.com/chapter/10.1007%2F3-540-40988-2_19 :

“SpaceTime is a mission concept developed to test the Equivalence Principle. The mission is based on a clock experiment that will search for a violation of the Equivalence Principle through the observation of a variation of the fine structure constant…”

That would make a gravitational field a region where the electromagnetic and strong coupling don’t march in step, and nothing more. That’s unification. By the way, a photon’s frequency doesn’t change in a gravitational field. It only appears to be blueshifted because your clocks are going slower when you’re lower. It doesn’t actually gain any energy. Turn a 1kg mass into light and throw it into a black hole, and the black hole mass increases by 1kg.

Ahhh – more empty Nature/Science papers where academics increase their citation/publication count with made-up pseudo controversies. That also allows us to neglect the real controversies on the shit they publish, like the memristor fraud. You are a tool, Chad.

Excellent summary (full disclosure – I’m a member of the Muller group, but not involved with the “controversial” papers). I’d point out a later paper (http://arxiv.org/abs/1102.4362) that is more in the vein of “shut up and calculate”.

A couple points in regards to the Compton clock paper – it really is more than just rewriting equations. This type of experiment is typically called a “recoil” measurement. The atom interferometer measures a frequency proportional to the recoil an atom experiences when it absorbs a photon. As one might guess, this frequency depends on both how heavy the atom is and how energetic the photon is. For the Compton clock, the photon energy dependence is removed. This is demonstrated in the paper – unlike in the recoil measurement, the photon energy can be changed without affecting the measured frequency, which now depends only on the mass of the atom. This measurement agrees with the accepted value for the mass of a cesium atom at a part per billion precision, so there actually isn’t need for a demonstration with two isotopes. However, with further improvements, this method could actually become the most precise way to measure isotope mass ratios which are currently at the part per billion level.

I took a look at that paper, and whoa:

“Gravity makes time flow differently in different places. This effect, known as the gravitational redshift, is the original test of the Einstein equivalence principle that underlies all of general relativity; its experimental verification is fundamental to our confidence in the theory”.

Time doesn’t literally flow. Clocks don’t literally measure the flow of time, they feature some regular cyclical motion which they effectively count, giving a cumulative display called “the time”.

Gravity per se doesn’t make clocks go slower or faster, a concentration of energy (usually in the guise of planet made of matter) “conditions” the surrounding space setting up a gradient in motion/process rates within it. As a result light curves, and then as a result of the wave nature of matter, things fall down.

The equivalence principle does not underlie all of general relativity, it’s a mere lead-in that employs a region of infinitesimal extent. Of zero extent. Of no extent! Breaching the equivalence principle does not undermine our confidence in GR. See Pete Brown’s essay at http://arxiv.org/abs/physics/0204044 and note the comments by Synge and Ray on page 20.

from Anonymous:

“For the Compton clock, the photon energy dependence is removed. This is demonstrated in the paper – unlike in the recoil measurement, the photon energy can be changed without affecting the measured frequency, which now depends only on the mass of the atom.”

I don’t think this is true. First of all, the frequency w_m still has N in it, which characterizes the laser frequency. Second, in Fig. 3, the measured w_m does indeed change; only when it is multiplied by (2nN^2) (the curve referenced to the right axis) is the data ‘constant’. The only new feature that I can figure is that the laser used for the interferometer is self-referenced and stabilized – in that way there is no need to make a separate laser frequency measurement to determine h/m (or h/mc^2), as is done in past recoil measurements. Of course, a common way to make a laser frequency measurement is to use a frequency comb to determine its frequency with respect to a known microwave or radio frequency.

Re: Compton Clock

I also don’t see how the two isotopes would resolve anything. The issue as I see it is that they are essentially measuring the recoil frequency, from which h/m (the ‘Compton frequency’ is just this ratio divided by c^2) can be determined once the laser frequency is measured; instead of explicitly measuring the laser frequency, they use a self-referenced frequency comb to enable feedback from the interferometer phase to the frequencies in the system, effectively making an ‘implicit’ measurement of the laser frequency … so as far as I can tell the only thing new about the ‘Compton clock’ is this implicit vs explicit determination of the laser frequency, and the fact that there is a physical output that has been stabilized. This last part works because of a clever arrangement where there is one frequency from which all of the laser frequencies, detunings, etc are derived.

If there are two different isotopes being used, one would expect to see the different frequencies because it is essentially an h/m measurement, as other past atom interferometer systems have been.

I wrote about the Compton clock here.

Chad Orzel wrote (on June 13, 2013):

> […] think of the atoms as little clocks, oscillating at a frequency known as the “Compton frequency.” These clocks, according to relativity, “tick” at different rates depending on their state of motion and their position relative to the Earth.

Surely the theory of relativity itself is not concerned with expectations about any specifics (such as “Compton frequency”, or “mass”) of “Earth” and/or of any particular atoms, in any particular trials.

It merely provides terminology and quantities to be measured (such as “frequency”, a.k.a. “tick” rate) in order to express various models; for instance the model, mentioned above, that the “Compton frequency” of different cesium atoms differs (significantly) “depending on their state of motion and their position relative to the Earth”; or in contrast for instance the model that the “Compton frequency” of different cesium atoms (specifically of Cs133 atoms) is very accurately constant and independent of “their state of motion and their position relative to the Earth”.

Of course, at least one of these two described models is false; but it should not be controversial how to carry out experimental tests to determine which of these two described models is false …